Introduction to Delta Live Tables (DLT)

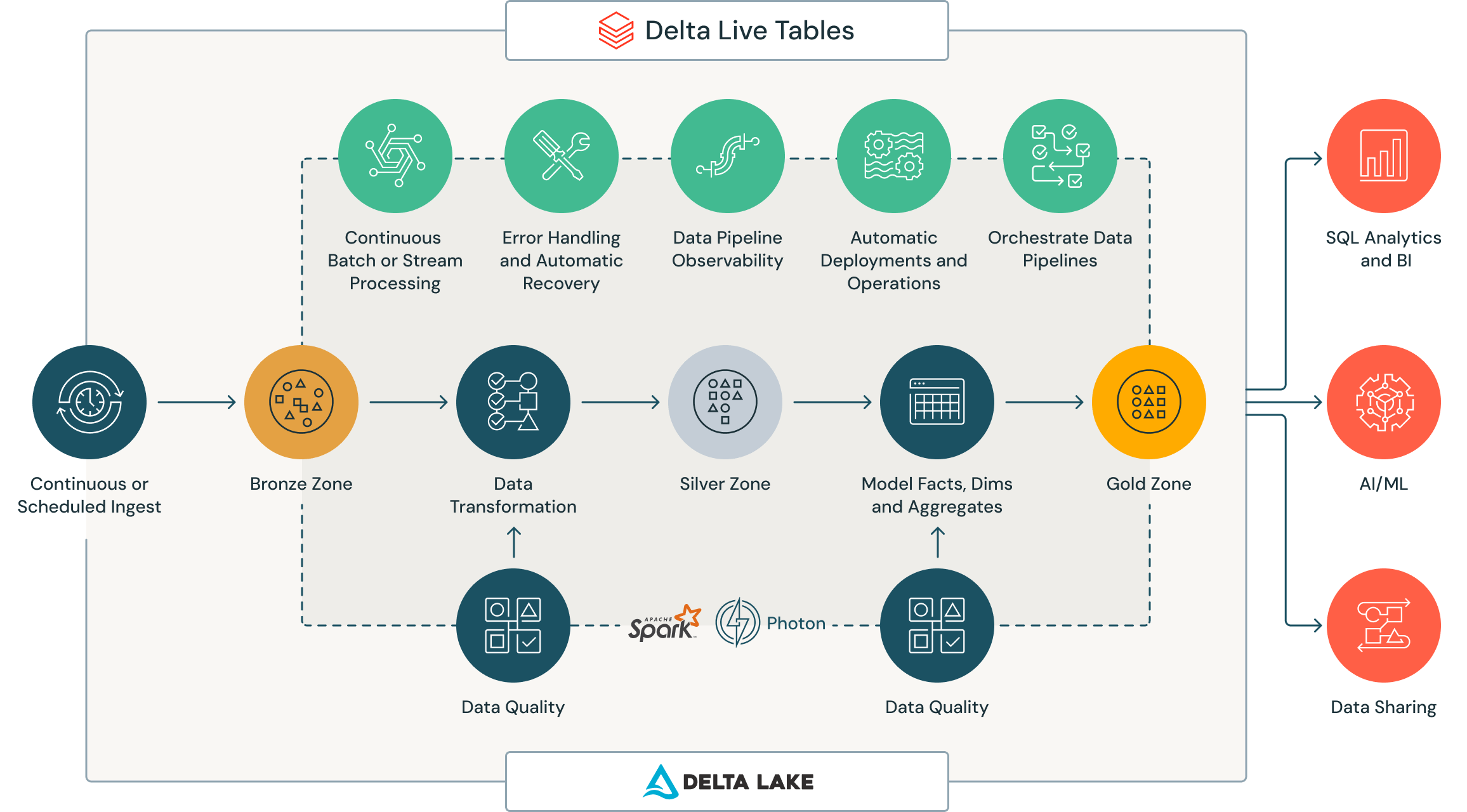

Delta Live Tables (DLT) is a declarative framework for building and managing data transformation pipelines on the Databricks platform. Data engineers describe the transformations they want at a high level, and DLT takes care of task orchestration. This speeds up pipeline development and deployment by automating cluster management, data quality monitoring, table optimization, and error handling. DLT can process data in both batch and streaming (near-real-time) modes, which makes it suitable for a wide range of workloads[1][4].

What Will You Learn in This Blog Post?

This blog post will provide an overview of Delta Live Tables, including:

- Key features and functionalities of DLT.

- Examples of how DLT can be applied in real-world scenarios.

- Important points to remember when using DLT.

- A comparison of the DLT product editions.

Key Features of Delta Live Tables

DLT offers several advanced features that enhance its utility for data engineers:

- Auto Loader: Incrementally ingests data from a data lake directory by processing only files it has not read before. This removes the bookkeeping that manual ingestion would otherwise require[2].

- Change Data Capture (CDC): Supports incremental data processing, so that changes propagate efficiently between data layers (bronze, silver, gold). Datasets stay up to date without being reprocessed in full[3].

- Slowly Changing Dimensions Type 2 (SCD Type 2): Enables data versioning by keeping both old and new records after updates. This is useful for maintaining historical data integrity[3].

- Self-Recovery: Automatically recovers from pipeline failures, ensuring robust and reliable pipeline operations[1][4].

- Data Quality Monitoring: Allows users to define and automatically monitor data quality rules, identifying and addressing problematic records efficiently[1][2].

- Table Optimization: Automates table optimizations and maintenance tasks to improve performance[1].
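To make the SCD Type 2 feature from the list above more concrete, here is a minimal sketch in plain Python. It is not the DLT API: in a real pipeline DLT applies this logic for you (in the Python API, through `dlt.apply_changes` with `stored_as_scd_type=2`). The `CustomerVersion` record, its field names, and the `apply_scd2` helper are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, replace
from typing import List, Optional


@dataclass(frozen=True)
class CustomerVersion:
    """One version of a customer record (hypothetical schema)."""
    customer_id: int
    city: str
    start_at: int                 # sequence value at which this version became current
    end_at: Optional[int] = None  # None means the version is still current


def apply_scd2(history: List[CustomerVersion], customer_id: int,
               city: str, seq: int) -> List[CustomerVersion]:
    """Return a new history with one change applied in SCD Type 2 style."""
    result = []
    needs_new_version = True
    for row in history:
        is_current = row.customer_id == customer_id and row.end_at is None
        if is_current and row.city == city:
            # Nothing changed, so keep the current version as it is.
            needs_new_version = False
            result.append(row)
        elif is_current:
            # Close the old version instead of overwriting it.
            result.append(replace(row, end_at=seq))
        else:
            result.append(row)
    if needs_new_version:
        result.append(CustomerVersion(customer_id, city, start_at=seq))
    return result


history: List[CustomerVersion] = []
history = apply_scd2(history, customer_id=1, city="Warsaw", seq=1)
history = apply_scd2(history, customer_id=1, city="Krakow", seq=2)
for row in history:
    print(row)
```

After the second change, the history holds both versions: the closed "Warsaw" row and the current "Krakow" row. That is the property that distinguishes Type 2 from a plain overwrite.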

Application Example

Consider a scenario where a retail company needs to process large volumes of sales transactions daily. Using DLT, the company can set up a pipeline that:

- Automatically ingests new transaction files using Auto Loader.

- Processes these transactions incrementally with CDC to update sales reports without reprocessing all historical data.

- Maintains historical records using SCD Type 2 for accurate trend analysis over time.

- Monitors data quality to ensure that only valid transactions are processed.

This setup not only reduces manual intervention but also ensures timely and accurate reporting.
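The first and last steps of this scenario can be sketched in plain Python. This is a conceptual illustration only, not DLT code: in DLT, Auto Loader tracks processed files and expectations enforce quality rules for you. The file names, the `amount` field, and the helpers `ingest_new_files` and `drop_invalid` are assumptions made up for the example.

```python
from typing import Dict, List, Set, Tuple

Transaction = Dict[str, float]


def ingest_new_files(landing: Dict[str, List[Transaction]],
                     processed: Set[str]) -> List[Transaction]:
    """Read only files that were not seen before and remember them."""
    rows: List[Transaction] = []
    for name in sorted(landing):
        if name not in processed:
            rows.extend(landing[name])
            processed.add(name)  # checkpoint: never read this file again
    return rows


def drop_invalid(rows: List[Transaction]) -> Tuple[List[Transaction], int]:
    """Keep rows with a positive amount and count the rows that were dropped."""
    valid = [r for r in rows if r["amount"] > 0]
    return valid, len(rows) - len(valid)


# Hypothetical landing directory, modelled as file name -> rows.
landing = {
    "sales_2024_01_01.json": [{"id": 1, "amount": 20.0},
                              {"id": 2, "amount": -5.0}],
}
processed: Set[str] = set()

batch1 = ingest_new_files(landing, processed)
valid1, dropped1 = drop_invalid(batch1)

# A new file arrives the next day; only that file is read on the second run.
landing["sales_2024_01_02.json"] = [{"id": 3, "amount": 12.5}]
batch2 = ingest_new_files(landing, processed)
valid2, dropped2 = drop_invalid(batch2)

print(len(valid1), dropped1, len(valid2), dropped2)
```

The second run touches only the new file, which is the behaviour that keeps daily processing cost proportional to new data rather than to the full history.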

Important Points to Remember

- Declarative Approach: DLT’s declarative nature simplifies pipeline creation by allowing engineers to focus on what needs to be done rather than how it should be executed[4].

- Edition Differences: DLT is offered in Core, Pro, and Advanced product editions, each adding features to the previous one. Core covers streaming ingestion with the SQL and Python APIs, Pro adds change data capture (CDC), and Advanced adds data quality expectations and monitoring[5].

- Performance Optimization: Enabling Photon, the Databricks vectorized query engine that is compatible with Apache Spark APIs, can significantly improve the performance of complex pipelines[6].
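The declarative approach mentioned in the first point can be illustrated with a small sketch: you state which datasets each table reads from, and an execution order is derived from those declarations. The example below uses Python's standard `graphlib` module (Python 3.9+) and hypothetical table names. It only mimics the idea and says nothing about how DLT resolves dependencies internally.

```python
from graphlib import TopologicalSorter

# Each dataset maps to the datasets it reads from (hypothetical names).
declared = {
    "gold_daily_sales": {"silver_sales"},
    "silver_sales": {"bronze_sales"},
    "bronze_sales": set(),
}

# The order is derived from the declarations, not written by hand.
order = list(TopologicalSorter(declared).static_order())
print(order)
```

The declarations are listed gold-first here on purpose: the derived order still starts with the bronze table, because only the dependencies matter, not the order in which they were written.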

Conclusion

Delta Live Tables provides a robust framework for building efficient ETL pipelines on Databricks. By automating many aspects of pipeline management and offering advanced features such as CDC and SCD Type 2 support, DLT helps data teams deliver high-quality data solutions quickly. Future posts will delve deeper into creating pipelines from scratch, working with SCD Type 2 changes, and exploring the logs generated by these pipelines.

Citations:

- [1] https://synccomputing.com/databricks-delta-live-tables-101/

- [2] https://www.databricks.com/product/delta-live-tables

- [3] https://docs.databricks.com/en/delta-live-tables/cdc.html

- [4] https://learn.microsoft.com/en-us/azure/databricks/delta-live-tables/

- [5] https://www.youtube.com/watch?v=IkntMq2INxo

- [6] https://www.linkedin.com/pulse/delta-live-tables-databricks-when-should-you-use-them-joshi

- [7] https://docs.databricks.com/en/delta-live-tables/index.html

- [8] https://www.databricks.com/product/pricing/delta-live